Reaching back to McLuhan, if it were true that the medium is indeed the message then the potential benefits of the web for society would be almost certainly attainable. But if we look at the medium itself as a symbolic construction created by society or parts of it, then we can start to appreciate how fragile the potential of the web really is. The web is not a technologically determined panacea. It is a bundle of technologies that will be socially constructed to fit whatever niche society needs it to. The real question is, what forces are determining how the web is constructed? (McQuivey, 1997, 3)

…technology is a social construction. Its design, organization, and use reflect the values and priorities of the people who control it in all its phases, from design to end use. After the design has been implemented, the system organized, and the infrastructures put in place, the technology then becomes deterministic, imposing the values and biases built into it. (Menzies, 1996, 27)

[H]ave you seen the movie Henry Fool? Just saw it last night. It’s about a fellow who can’t get print magazines to publish his poetry because they consider it too pornographic, too crude, but he has it published on the Internet, and he becomes a huge celebrity. Just thought you’d find it of interest. (Wu, 1998, unpaginated)

In the beginning was the word.

Initially, the word was spoken. In the bible, the word of god breathes life into the universe. In its oral form, the word was (and remains) a living thing, an ever-changing repository of tribal wisdom handed down from generation to generation.

Print changed everything. The word was no longer elusive, but fixed. In such a form, it no longer relied on the memory of people for its existence; it now had a form independent of those that created it. Fixed between two covers, the word, which had once belonged to the entire tribe, could now be ascribed to a single author.

In its new form on the page, the word began to take on a life of its own: now, the people who had created it would look to the word for wisdom. Books could be consulted long after their authors had died, and the information in them would always be the same. New types of knowledge could now be built on solid foundations which could not exist when the word was in its evanescent oral form.

Digital technologies give the word a new form. Or, do they? The differences between the spoken word and the written word are obvious: one is based on the sounds made through exhalations of air, the other is made by the imprint of abstract characters on paper. The differences between analogue and digital forms of the word are less obvious. You may be reading this dissertation in print form, or you may be reading it on a computer. In both cases, the same words (approximately 150,000 of them), in the same sequences, grow into the same sentences. The sentences follow one another to create exactly the same paragraphs, paragraphs become chapters, and the chapters follow, one upon the other, to create the whole. The word is the same.

Yet, it is different. Print is portable; you can read a book anywhere you can carry it. The advent of electronic books notwithstanding, digital media are bulky and difficult to transport, requiring the reader to go to them. Print pages are easy on the eyes; computer screens are difficult for many people to read from for long periods of time. On the other hand, it’s not always easy to find a specific passage in a print book, while a simple word search can usually take a reader to a specific place in a digital document almost instantaneously. Print requires a lot of effort to copy and send to others; digital text is trivially easy to copy and send to others. In fact, while the printed word is fixed, the digital word is constantly changing. Our experience of the word in these two forms is substantially different.

But the differences do not end there. An entire industry has developed over the centuries whose goal is to deliver the printed word from its creator to its reader. This industry includes printing presses, publishing houses, bookstores, and vast distribution networks. The electronic word, by way of contrast, takes a completely different route from creator to reader. This route includes Internet Service Providers, telephone or cable companies, and computer hardware and software manufacturers. The social structures which have developed to disseminate the word in its print and digital forms are substantially different. This difference is one of the primary subjects of this dissertation.

The word’s most enduring form, one which cuts across all media, is the story. In oral cultures, the story was both a way of communicating the history of the tribe and teaching each new generation the consequences of disobeying its moral imperatives. (An argument can be made that the modern equivalent of the oral story, the urban myth, serves this second purpose as well.) Print enhances story by ensuring that it does not change from generation to generation, creating the possibility of a dialogue between the present and representations of the past (most often engaged in literary scholarship, although we all engage in this process when we read a book which was published before we were born).

With the rise of print, story undergoes an amoeba-like bifurcation, splitting into the categories of “fiction” and “non-fiction.” Non-fiction stories are based on real people and events, making the claim to be based on verifiable facts, a claim we mostly accept. This dissertation is a non-fiction story. Fiction stories, by way of contrast, are populated by characters who never lived, having adventures which are the stuff of imagination. Oral stories do not make such a distinction: real and made-up characters interact with scant regard to historical accuracy; embellishments added from generation to generation change the story until it bears little resemblance to its original version. With oral stories (as with fictional print stories), the test of their value is not verifiability, but the “truth” they speak to the human heart.

I am most interested in the production of fictional stories. Casually surfing the World Wide Web, one can sometimes come across a piece of fiction. If one is looking specifically for fictional stories, they are easy enough to find, hundreds upon hundreds of them. This observation has led me to suspect that the introduction of networked digital technologies, particularly the Web, is changing the nature of publishing.

This dissertation will explore the phenomenon of fiction writers who place their stories on the World Wide Web. To do so, I will attempt to answer a series of questions about the subject. Who are the people putting their fiction online? What do they hope to accomplish with digital communication media that they could not accomplish with traditional publishing media? What are the features of the World Wide Web which either help them further or keep them from achieving their goals? What other groups have an interest in this technology? How will those other groups’ actions in furthering their own goals affect the efforts of individuals putting their fiction online?

To answer these questions, we need a general idea of how technology interacts with human desire, how technology shapes and is shaped by human goals. This theoretical understanding can then be applied to the specific situation under consideration. So, to begin, we must ask ourselves: what is the relationship between technology and society?

Thesis: Technological Determinism

There is a school of thought which claims that technological innovations change the way individuals see themselves and each other, inevitably leading to changes in social institutions and relations. This possibility was delineated most directly by Marshall McLuhan, who wrote, “In a culture like ours, long accustomed to splitting and dividing all things as a means of control, it is sometimes a bit of a shock to be reminded that, in operational and practical fact, the medium is the message. This is merely to say that the personal and social consequences of any new medium — that is, of any extension of ourselves — result from the new scale that is introduced into our affairs by each extension of ourselves, or by any new technology.” (1964, 23)

This belief is known as technological determinism. Its precedents lie in the work of Harold Innis (1951), a mentor of McLuhan’s at the University of Toronto. Current proponents of the idea include Derrick de Kerckhove (1997) and Christopher Dewdney (1998); the theory’s most vociferous champions can be found in the pages of Wired magazine (which claims McLuhan as its “patron saint”).

Technological determinism offers important insights into how technologies affect society. However, there are at least three problems with the way in which deterministic theories posit the relationship between technology and society which diminish their usefulness.

1) Deterministic theories do not have anything to say about how technologies are developed before they enter society. There may be some validity to the position that technologies take on a life of their own once they are introduced into the world; they certainly make some options for their use more likely than others (you would find it hard to talk to a friend in a distant city if the only machine at your disposal was a coffeemaker, for example). However, technological determinists do not address issues such as the assumptions which go into the development of technologies, or even why research into some technologies is pursued by industry and the academy while research into other technologies is not.

2) Deterministic theories do not have anything to say about what happens when competing versions of the same technology are introduced into a society. We can all agree that the VCR changes people’s television viewing habits (by allowing them to watch programs on their own schedules, not those of the network; to fast forward through commercials; to avoid scheduling conflicts by watching one program while taping another; etc.). It is less clear, from a purely deterministic point of view, why VHS machines should have captured the marketplace rather than Beta. Yet, one system will arguably have somewhat different effects on society than the other.

3) Deterministic theories do not have anything to say about the ongoing development of technologies after they have been introduced into society. Anybody who has purchased a computer in the last few years knows that memory and computing speed increase on a regular basis, which allows for increasingly sophisticated software. If you were to be charitable, you could say that we are in a state of constant innovation (if you were to be cynical, you could say that we are in a state of constant built-in obsolescence). Improvements in memory and computing speed which allow for increasingly sophisticated software are not merely changes of degree, but, in fact, change the nature of computing. For example, one important threshold was passed when processors became powerful enough to make a graphical interface feasible, making computer users less dependent on line commands. Prior to this, only experts with the time to learn all of the esoteric commands of the computer could use it; now, of course, it is not necessary to be a programmer to use a computer. Other thresholds might include networking computers and the ability to call up video, both of which involve incremental changes in existing technologies which hold the potential to change the way the technology is used, and, in turn, affect individuals and human relationships. [1]

Antithesis: Social Constructivism

Any theory attempting to draw a relationship between technology and society must explain how technologies develop, both before and after they are introduced into the marketplace. Such a theory must take into account the fact that decisions about how technologies are developed and employed are made by human beings. As David Noble describes it,

And like any human enterprise, it [technology] does not simply proceed automatically, but rather contains a subjective element which drives it, and assumes the particular forms given it by the most powerful and forceful people in society, in struggle with others. The development of technology, and thus the social development it implies, is as much determined by the breadth of vision that informs it, and the particular notions of social order to which it is bound, as by the mechanical relations between things and the physical laws of nature. Like all others, this historical enterprise always contains a range of possibilities as well as necessities, possibilities seized upon by particular people, for particular purposes, according to particular conceptions of social destiny. (1977, xxii)

Different individuals will want to use technology for their own purposes; where such purposes conflict, how the technology develops will be determined by the outcome of the negotiations around the conflict. This view of technological development is part of a field known as social constructivism.

One traditional way of looking at technological development is that scientists are given a research problem and, through diligent work, find the best solution to the problem. Social constructivists begin by arguing that “social groups give meaning to technology, and that problems…are defined within the context of the meaning assigned by a social group or a combination of social groups. Because social groups define the problems of technological development, there is flexibility in the way things are designed, not one best way.” (“Section 1 Introduction,” 1987, 12) The myth of “the one best way” creates a linear narrative of technological development which tends to obscure the role negotiation plays. It has also allowed historians of technology to avoid the issue of the interests of scientists in the work they pursue, giving them the chance to portray scientists as disinterested “seekers after truth.”

To understand the contested nature of technology, it is first necessary to define what are known in the literature as “relevant social groups.” “If we want to understand the development of technology as a social process, it is crucial to take the artifacts as they are viewed by the relevant social groups.” (Bijker, 1995, 49) How do we draw the line between relevant and irrelevant social groups for a given technology? Groups who have a stake in the outcome of a given technology can be said to be relevant; for instance, relevant stakeholders in the development of interstate highways would include auto manufacturers and related industries, construction companies, rail companies, drivers’ groups and the like, because each group’s interests will be affected. It is hard to see, by way of contrast, the stake which beekeepers or stamp collectors hold in interstate highways. Because they describe more or less the same thing, I will be using the terms “stakeholders” and “relevant social groups” interchangeably throughout this dissertation.

What actually holds groups together? That is to say, what is their common stake? Social constructivists suggest that they share a common way of defining and/or using a technology, a “technological frame.”

A technological frame is composed of, to start with, the concepts and technique employed by a community in its problem solving… Problem solving should be read as a broad concept, encompassing within it the recognition of what counts as a problem as well as the strategies available for solving the problems and the requirements a solution has to meet. This makes a technological frame into a combination of current theories, tacit knowledge, engineering practice (such as design methods and criteria), specialized testing procedures, goals, and handling and using practice. (Bijker, 1987, 168)

In later writing, Bijker would argue that the concept of the technological frame should apply to all relevant social groups, not just, as one might think from the above quote, engineers. (1995, 126) To this list, then, we might wish to add additional qualities for consumers of technology, qualities such as existing competing technologies, availability, cost, learning curve, and so on. No one list of attributes will describe the technological frame of every possible relevant social group, but every relevant social group will have its own technological frame.

The impetus for technological change begins with a problem. This makes sense: if there were no problem, there would be no reason to change the status quo. The farmer who wants to get his or her produce to a wider potential market will embrace solutions like a better interstate highway system. This may give the mistaken impression, however, that scientists respond to the real world problems of others. In fact, as will become apparent in Chapter Three, it sometimes happens that the only stakeholder with an interest in the development of a technology is the corporation which is developing that technology, and the problem to be solved is the rather prosaic one of how to increase the company’s profits.

Our common sense understanding of the world would suggest that a technological artifact has a single definition upon which all can agree. A car is a car is a car is a car… Yet, constructivists would argue that, seen through the filter of different technological frames, artifacts can be radically different. “Rather, an artifact has a fluid and ever-changing character. Each problem and each solution, as soon as they are perceived by a relevant social group, changes the artifact’s meaning, whether the solution is implemented or not.” (ibid, 52) A car means one thing to a suburbanite who needs it to get to work; it means quite another thing to a member of MADD (Mothers Against Drunk Driving) who has lost a child in an automobile accident. The same artifact which is a solution to the problem of one relevant social group may, in fact, be a problem which needs a different solution to the member of another relevant social group. This is a direct challenge to the “one best way” model of scientific development, since it suggests that whether or not a technology “works” depends upon how you have defined the problem it is intended to solve. As Yoxen puts it, “Questions of inventive success or failure can be made sense of only by reference to the purposes of the people concerned.” (1987, 281)

This multiplicity of interpretations, or actual artifacts, does not, cannot last. We require technologies to work in the real world; therefore, they must, at some point, take a fixed form. The process by which this happens is referred to by social constructivists as “closure.”

Closure occurs in science when a consensus emerges that the ‘truth’ has been winnowed from various interpretations; it occurs in technology when a consensus emerges that a problem arising during the development of technology has been solved. When the social groups involved in designing and using technology decide that a problem is solved, they stabilize the technology. The result is closure. (“Section 1 Introduction,” 1987, 12)

That is to say, when there is general agreement on the nature of a technology, when different stakeholder groups adopt a common technological frame, the technology takes on a fixed form. It is possible that various frames will be combined before closure occurs, but, “Typically, a closure process results in one relevant social group’s meaning becoming dominant.” (Bijker, 1995, 283)

Occasionally, the process of technological innovation is compared to Darwin’s theory of natural selection. “[P]arts of the descriptive model can effectively be cast in evolutionary terms. A variety of problems are seen by the relevant social groups; some of these problems are selected for further attention; a variety of solutions are generated; some of these solutions are selected and yield new artifacts.” (ibid, 51) This theoretical analogy is misleading, however: nature is unconcerned with the outcome of the struggle between species for survival, but relevant social groups are, by definition, concerned with the struggle between different forms of technology for supremacy in the marketplace. Natural selection is blind and directionless, whereas technological selection is interested and purposefully directed.

There are problems with social constructivism. They include:

1) Constructivists have nothing to say about what happens after a technology is introduced into a society. The problem goes beyond the fact that most of us have experienced significant changes to our lives as a result of our introduction to new technologies, an experience which would give us an intuitive sense that technology does have effects once people start using it. Constructivists ignore the consequences of the actions of the groups they study, devaluing their own work. It is interesting, to be sure, to know how Bakelite or the safety bicycle developed, but what gives such studies import is how those technologies then go on and affect the world. If the effects of new technologies are a matter of indifference, the process by which they are created isn’t an especially important research question.

2) The term closure suggests complete acceptance and permanence of a technology; I would argue, however, that closure is never perfect in either of these ways. For one thing, some social groups may never accept the dominant definition of a technology. Controversy over the nature of radio, it is argued, was effectively closed when the corporate, advertising-based structure with which we are all familiar turned it into a broadcast medium. However, from time to time stakeholder groups have developed which did not accept this form of radio, whose interest was in using radio as a one to one form of communication (thus, the CB craze of the 1960s and 1970s, or the use of HAM radios since the medium’s inception), or in simply opposing the regulation of the airwaves which supports the corporate domination of the medium (pirate radio stations). To be sure, these groups are marginal and do not pose a serious threat to the general consensus as to what radio is; however, they illustrate the point that closure is not complete, that relevant social groups will continue to exist even after debate about the nature of a technology seems to have been closed.

Even more telling is the fact that technological research and development continues even after conflict over a specific artifact seems to have been closed. In its earliest form, radio programming included educational and cultural programs and entertainment, including all of the genres (comedy, western, police drama, etc.) which we now take for granted. However, when television began to become widespread in the 1950s, it replaced radio as an entertainment medium; people could see their favourite stars as well as hear them! Many of radio’s most popular performers (Jack Benny, Burns and Allen, et al) moved to television. The introduction of television opened up the debate about what radio was, a debate which had seemed closed for over 20 years; stakeholders had to find a new definition of the medium (which they did, moving towards all music and all news formats). Thus, even though the technology itself had not changed, I would argue that radio was a different medium after the introduction of television than it had been before.

A more dramatic example is the emergence of digital radio transmitted over the Internet. Many stations which broadcast via traditional means are, at the same time, digitizing their signals and sending them over the Internet. On the one hand, this increases their potential listenership (although the value of this is debatable given that most radio stations’ advertising base is local). On the other hand, it opens radio up to competition from individuals who can set up similar music distribution systems on their personal Web pages. Where this will lead is anybody’s guess. For our purposes, it is only important to note that digital distribution of radio signals opens up, again, the seemingly closed debate about what radio is. (In a similar way, I hope that this dissertation will show that online distribution of text has opened up the debate about publishing technology which seemed to have been closed when the printing press established itself in Europe 500 years ago and especially as it became an industrial process in the nineteenth and twentieth centuries.)

These are, for the most part, conservative changes in Hughes’ sense, where “Inventions can be conservative or radical. Those occurring during the invention phase are radical because they inaugurate a new system; conservative inventions predominate during the phase of competition and system growth, for they improve or expand existing systems.” (1987, 57) Nonetheless, even conservative changes reintroduce conflict over the meaning of a technological artifact which had been considered closed.

3) Constructivist accounts of technological creation tend to be ahistorical. To allow that new technologies have developed out of existing technologies would be to admit that closure is an imperfect mechanism, as well as possibly requiring the recognition of some deterministic effects in the way old technologies create social problems which require the creation of new technologies to solve. However, new technologies frequently develop out of combinations of existing theories and technologies.

Synthesis: Mutual Shaping

Technological determinism and social constructivism suffer from a similar problem: they both posit a simple, one-way relationship between technology and society. With technological determinism, technology determines social structure. With social constructivism, social conflict ultimately determines technology.

I would suggest that the situation is more akin to a feedback loop: the physical world and existing technologies constrain the actions which are possible for human beings to take. Out of the various possibilities, stakeholder groups vie to define new technologies. Once the controversy has died down, the contested technology becomes an existing technology which constrains what is now possible. And so on. (See Figure 1.1) We can determine the positions of constructivists by analyzing their stated claims and actions; we can construct the effects of determinism, however provisionally, by linking consensus about the shape of technology to wider social trends.

Boczkowski calls this process “mutual shaping.”

Often, scholars have espoused a relatively unilateral causal view. They have focused either on the social consequences of technological change, or, most recently, on the social shaping of technological systems. Whereas the former have usually centered upon how technologies impact upon users’ lives, the latter have tended to emphasize how designers embed social features in the artifacts they build. In this sense, the process of inquiry has fixed either the technological or the social, thus turning it into an explanans rarely problematized. However, what the study of technology-in-use has ultimately shown is that technological and social elements recursively influence each other, thus becoming explanans (the circumstances that are believed to explain the event or pattern) and explanandum (the event or pattern to be explained) at different periods in the unfolding of their relationships. (1999, 92)

This feedback model is roughly the situation described by Heather Menzies in the quote above. It is also hinted at in some of the work of pure social constructivists. For instance, Bijker writes that “A theory of technical development should combine the contingency of technical development with the fact that it is structurally constrained; in other words, it must combine the strategies of actors with the structures by which they are bound.” (1995, 15) Surely, the structural constraints Bijker alludes to must include existing technologies; but this would allow deterministic effects in by the back door, so he cannot pursue the line of thought.

Mutual shaping solves the problems faced by each of the theories taken on their own. It explains what happens before a technology is introduced into a society and after it has been shaped by various stakeholders. It allows us to understand what happens if different technologies are sent out to compete in the marketplace. (“Empirical studies informed by this conceptual trend have revealed that users integrate new technologies into their daily lives in myriad ways. Sometimes they adapt to the constraints artifacts impose. On other occasions they react to them by trying to alter unsuitable technological configurations. Put differently, technologies’ features and users’ practices mutually shape each other.” (ibid, 90))

Figure 1.1

The Iterative Relationship Between Society And Technology

Adapted from Boczkowski, 1999.

We can also use this way of looking at the relationship between technology and society to go beyond the concept of closure, which doesn’t seem to account for the way definitions of technology continue to be contested. I would suggest that technologies go through periods of “stability,” periods where the definition of what the technology is is widely (though perhaps not universally) agreed upon, and where the form of the artifact does not change. Stability roughly corresponds to the period in Figure 1.1 between when a technological change is introduced into a society and the recursively triggered mediations which lead to the creation of new technologies. Periods of stability can last centuries or a matter of years.

Mutual shaping is not likely to satisfy deterministic or constructivist purists. However, those who are less dogmatic about such things (for instance, what Paul Levinson might call “weak determinists” (1997)) may be willing to allow some of the effects of the other theory, to their benefit.

It isn’t necessary to equally balance deterministic and constructivist effects in every study of technology. Those who are most interested in social effects will emphasize certain aspects of the relationship between society and technology; those interested in technological development will emphasize other aspects. The important thing is to recognize that both processes are at work in a larger process, and to make use of one theory when it will help illuminate the other.

In this dissertation, for example, I will take a primarily social constructivist approach. For this reason, I have to answer at least two questions. 1) What are the relevant social groups/stakeholder groups and what is the technological frame which they use to define their interest in the technology? 2) How are conflicts between stakeholders resolved en route to the stability of the technological artifact? The final section in this chapter will give a brief overview of the stakeholders in traditional and electronic publishing. The body of the dissertation will then elaborate in much greater detail on the interests of specific stakeholder groups. What I hope to accomplish is a “thick description” of the social forces which are at work shaping this particular use of this particular computer mediated communication technology, and what various possible configurations of the technology do and do not allow different stakeholder groups to achieve. Thick description involves “looking into what has been seen as the black box of technology (and, for that matter, the black box of society)… This thick description results in a wealth of detailed information about the technical, social, economic, and political aspects of the case under study.” (“General Introduction,” 1987, 5) Where necessary, such description will include the possible effects of specific forms of technology on individuals and their social relationships.

Before I do that, it is useful to consider a brief history of the technologies involved in digital publishing, which I do in the next section. These histories are by no means definitive. My intention is not to close off debate about what the technology is before it has even begun. Instead, I hope to set the table for the reader, to give a brief indication of how we got to the point where the conflicts described in the rest of the dissertation are possible.

Electronic publishing comes at the intersection of two very different technologies: the printing press, over 500 years old, and the digital computer, over 50 years old. This section will look at some of the relevant history of the two technologies.

The Printing Press

Imagine a pleasant summer’s day in 1460. Through the window of your monastery in the German countryside, you can see fluffy white clouds in a pale blue sky. But you only catch fleeting glimpses of the world outside, because you are engaged in serious business: the transcription of a Latin text. You work in what is known as a “scriptorium:” your job is to take an ancient text and copy it, word for word. The work is detailed, painstaking; a single text will take several years for you to complete (after which you must hand it over to the illustrators and illuminators, who will take additional years to perfect the volume). You are not allowed to emend the text in any way, and you certainly are not allowed to comment on what you are reading; the quality of your work will be based solely on its fidelity to the original. Yet, the work is satisfying, because you know that your small efforts are part of a great project to keep knowledge alive during a period of intellectual darkness.

If you are lucky, you will go to your grave without learning that the world you believe you live in no longer exists.

Five years earlier, in 1455, the son of a wealthy family from Mainz, Johannes Gutenberg, published what was known as the 42 line bible (because each page was made up of 42 lines). The work itself was of generally poor quality; it wasn’t nearly as esthetically pleasing as a hand-copied bible. Nonetheless, it may be the most significant book in publishing history because it is the first book historians acknowledge to be printed with a press.

The Gutenberg printing press consisted of two plates connected by a spring. On the bottom plate was placed the sheet of paper on which text would be imprinted; the top plate contained metal cubes from which characters (letters, punctuation marks, blanks for spaces between words) were projected. The surface of the upper plate was swathed in ink; when pressed onto the paper on the bottom plate, it left an impression of the text. The spring would then pop the upper plate back in place so that another piece of paper could be placed underneath it. And another. And another. Whereas a monk in a monastery would take years to complete a single volume, those who ran a printing press could create several copies of an entire book in a matter of days.

Gutenberg’s press was not created out of thin air, of course. The press itself was a modification of an existing device used to press grapes to make wine. Furthermore, the concept of imprinting on paper had existed for thousands of years: woodcutting, where text and images were carved into blocks of wood over which ink would be spread so that they could be stamped on paper, had existed in China for millennia. Gutenberg’s particular genius was to take these and other ideas and put them together into a single machine.

One of the most important aspects of the printing press was moveable type (which, again, had existed in Asia for perhaps centuries before Gutenberg employed it); instead of developing a single unit to imprint on a page (as had to be done with woodcuts), the Gutenberg press put each letter on a cube, called type. The type was lined up in a neat row, and row upon row was held firmly in a frame during printing. Woodcutting had a couple of drawbacks: it was slow, detailed work, which meant that publications with a lot of pages would take a long time to prepare. Furthermore, if a mistake was made, the whole page had to be recut. Pages of moveable type, on the other hand, could be developed quickly, and mistakes caught before publication required the recasting of a single line rather than the recreation of an entire page.

It is not an exaggeration to say that printing modeled after Gutenberg’s press exploded: printing is believed to have followed the old trade route along the Rhine river from Mainz: Strasbourg soon became one of the chief printing centers of Europe, followed by Cologne, Augsburg and Nuremberg. Within 20 years, by the early 1470s, printing had reached most of Europe; for example, William Caxton set up his printing shop in Westminster Abbey in late 1476. Within about 100 years, printing presses had been set up throughout the world: by 1540, there was a print shop in Mexico, while by 1563, there was a press in Russia. The fact that it took a century for the printing press to travel throughout the world may not impress modern people who are used to hardware and software being updated on a virtually daily basis; however, given the crudity of transportation facilities and lack of other communications networks at the time, the speed with which the press developed was astonishing. Of course, wherever the printing press went, a huge increase in the number of books available soon followed. Within a couple of decades, most monasteries were relieved of their responsibility for copying books and the scriptoria were closed down, although some held out for as much as a century.

The printing press had almost immediate effects on the social and political structures of the day. The scriptoria concentrated on books in Latin, a language which most laypeople (who were illiterate) did not understand; interpretation of the word of god, and the power over people which went with it, was jealously guarded by the Church. That institution’s monopoly of knowledge (to use Innis’ insightful phrase) was all but airtight. Soon after the printing press began to spread, copies of the bible and other important religious texts were printed in the vernacular of the people in various countries; it now became possible for individuals to read and interpret the bible themselves. This is believed to have been a contributing factor in the Protestant Reformation: Martin Luther’s famous challenge to the Catholic Church was accompanied by the widespread dissemination of inexpensive copies of the Bible in languages people could understand.

From the perspective of the current work, one important aspect of the printing press (although it would take hundreds of years to manifest) was the way it created a mystique around the individual author. The monks who copied manuscripts were not considered originators of the work, and are now almost completely forgotten. Before that, information was, for the most part, transmitted orally. While some cultures developed rich histories through the stories which were passed down from generation to generation, they were not considered to be the work of any individual. The printing press, on the other hand, made it possible to identify works as the sole creation of an individual, since they went directly from the author to the printer.

Another important aspect of printing was that it made knowledge sufficiently uniform and repeatable that it could be commodified. Oral stories were considered the property of the tribe; because they often contained morals about acceptable and non-acceptable behaviour, it was important that everybody in the tribe know them. Printed books, especially when the printer (and, later, the author) could be identified, were seen as a source of income, and were immediately marketed as any other product.

Books also required a new skill of people: literacy. In the early days of print, only approximately 25% of men and far fewer women knew how to read. Many more had a command of “pragmatic literacy” (knowing how to read just enough to get by in their day to day lives), although how literate this made them is debatable. The people who could read were primarily clerics, teachers, students and some of the nobility; reading also quickly developed among urban residents in government or those involved with trade, law, medicine, etc. This latter trend is only logical: these were areas of knowledge where information was being published, and the ability to read was necessary to keep up with the latest writing in one’s field. Universal literacy only became an issue toward the end of the 19th century. At the time, some people argued that literacy would benefit people by giving them direct access to classical texts which would expand their minds. However, it has been argued that broader literacy was actually necessary for the industrial revolution, since workers needed to read technical manuals to better help them run the machines of the new age.

In the 18th century, methods of copper engraving made it possible to print sophisticated drawings on the same page as text. In addition, the move from wooden to metal presses meant an increase in how much could be printed: 250 pages an hour! In the 19th century, mechanical processes of paper production were introduced which further increased the production of paper 10- to 20-fold. (This was accompanied by a change in materials, from pulped rags to pulped wood, which helped speed up the process, but meant that the paper would begin to disintegrate after 50 or 100 years.)

In the 19th century, innovations in printing fixed the technology more or less as we know it today. In 1847, R. Hoe & Co. introduced the rotary press, where a flexible metal plate was rotated over a moving sheet of paper. It was inked after every turn, and paper was automatically fed into the machine as the plate turned. The rotary press was also the first press not to be run by human power: it was powered by steam, at first, and eventually by electricity. Unlike the hand press, which could only produce 300 to 350 sheets a day at that time, a power rotary press could print from 12,000 to 16,000 sections in the same day. These improvements led to an explosion of printed material which continued unabated through the 20th century: “There might be a panic about the passing of print and the growing popularity of the screen, but the reality is that more books are being published now [in 1995] than ever before.” (Spender, 1995, 57)

For more on the history of the printing press, see Lehmann-Haupt (1957), Butler (1940), Katz (1995) and Pearson (1871).

The Digital Computer

To fight World War II, the Allied Forces developed bigger and bigger artillery to deliver larger and larger destructive payloads to the enemy. However, the army faced a difficult problem: how to ensure that the shells hit their targets? Calculating rocket trajectories was an extremely complicated process which involved the weight of the vehicle, the terrain over which it was to travel, fuel consumption, velocity, weather conditions, the speed of the moving target and a host of other variables. Human beings computed these trajectories, but their work was slow and riddled with errors. This meant that enemy aircraft were virtually free to roam Allied airspace at will. What was needed was a mechanical device which could calculate trajectories much more quickly, and with a much smaller margin of error than human computers.

The answer, of course, was the development of mechanical computers.

This problem had plagued armies since the 19th century, and one solution had, in fact, been proposed at the time. In 1822, Charles Babbage proposed a mechanical device which could calculate sums which he called a “Difference Engine.” The Difference Engine used the most sophisticated technology available at the time: cogs and gears. As designed, it would have calculated trajectories much faster than human beings, and, since Babbage designed a means by which the calculated tables could be printed directly from the output of the Difference Engine (an early form of computer printer), human error was all but eliminated. The Difference Engine had a major drawback, however: it could only be designed to calculate the solution to one mathematical problem. If conditions changed and you needed the solution to a different mathematical formula, you had to construct a different machine.

Understanding the problems with this, Babbage went on to design something different, which he called the “Analytical Engine.” The Analytical Engine employed a series of punched cards (perfected in 1801 by Joseph-Marie Jacquard for use in automating looms for weaving) to tell the machine what actions to perform; the action wasn’t built into the machine itself. The alternation between solid spaces and holes in the card is a type of binary language, which is, of course, the basis of modern computers. With the Analytical Engine, branching statements (if x = 10, then go to step 21) became possible, giving the machine a whole new set of possible uses. The first person to develop a series of cards into what we would now call “programs” was Lady Ada Augusta, Countess of Lovelace (daughter of the poet Lord Byron).

Neither of the machines envisioned by Babbage was built in his lifetime. However, his ideas can, in retrospect, be seen as the precursors to the first digital computer, the ENIAC.

Research in the United States leading to the creation of ENIAC (Electronic Numerical Integrator and Computer) began in 1943. When completed, the machine consisted of 18,000 vacuum tubes, 1,500 relays, 70,000 resistors and 10,000 capacitors. ENIAC was huge, taking up an entire large room. It had to be built on a platform, its wires running beneath it. The machinery was so hot that the room had to be constantly kept at a low temperature. Ironically, given its intended purpose, ENIAC didn’t become fully functional until after the war had ended in 1945.

At around the same time, researchers in England were developing a different digital machine in order to solve a different military problem. German orders were scrambled by a machine code named “Enigma;” if the Allies could break the German code, they could anticipate German actions and plan their own strategies accordingly. A key insight in this effort came from a brilliant English mathematician, Alan Turing: he realized that any machine which could be programmed with a simple set of instructions could be reproduced by any other machine which could be so programmed. This has come to be known as the Turing Universal Machine (the implication being that all such machines can be reduced to one machine which can do what they all could do). The original designs of the “Ultra,” Britain’s decoding machine, were electromechanical, calling for mechanical relays (shades of Babbage!), but they switched to electronic relays as the technology became available. This work was an important part of the war effort.

These two threads of research paved the way for modern computers, but a lot of changes had to be made before we could achieve the systems we have today. One of the first was the development of transistors out of semiconducting materials at AT&T’s Bell Laboratories in the late 1940s. Replacing vacuum tubes with transistors made computers smaller, faster and cheaper. However, the transistors had to be wired together, and even a small number of faulty connections could render a large computer useless. The solution was the creation of the integrated circuit, developed separately by Jack Kilby of Texas Instruments and Robert Noyce of Fairchild Semiconductor; this put all of the transistors on a single wafer of silicon. This has led to generation after generation of smaller and faster computers as more and more transistors are packed onto single circuits.

The very first computers had toggle switches on their fronts which had to be thrown by users at the right time. These were soon replaced by piles of punch cards which contained the program to be run and the data to run it on. You sent the cards to a data center, where your programme would be put at the end of a line with the programmes of others and run in its turn; the next day, you would get a printout of your programme and see if it, in fact, worked. An important development in the history of mainframes, as these room-sized computers were called, was the concept of time-sharing: instead of running programmes in sequence, 10 or 15 programmers could use the mainframe at the same time. Moreover, by using keyboard inputs rather than punch cards, programmers could communicate directly with the machine.

Still, to use a computer, you had to be trained in its language. Two very important technological developments had to occur before computers could be used by untrained people: the mouse and the iconic visual display. The visual display allowed computer users to see what they were doing on a screen. By using a mouse for input, users could point at an icon on the screen which represented what they wanted to accomplish and click on it to command the computer to do it. This more intuitive method of using computers was developed in the 1960s by Douglas Engelbart at the Augmentation Research Center (although practical working models weren’t developed until the late 1960s and early 1970s at a different institution, Xerox Palo Alto Research Center, or PARC).

In the late 1970s, Apple Computer introduced its first desktop personal computer. Their machine was a unit small enough to fit on a person’s desk; it included a screen, keyboard and mouse. Unlike the International Business Machines computers of the time, Apple’s computers were created to be used by individuals, not businesses. Unlike machines for computer hobbyists (such as the Altair), Apple computers did not require engineering knowledge to assemble. The company’s biggest breakthrough came in 1984, with the popularity of the Macintosh.

A single computer can be powerful enough, but connecting computers together not only creates an even more powerful computing entity, but it also gives computers a new function: tools with which to communicate. This was the impetus for the American Department of Defense’s Advanced Research Projects Agency (ARPA) to develop methods of connecting computers together. This research in the 1960s and 1970s led to the creation of ARPANet, a computer network designed to link researchers (primarily on military projects, although it soon expanded beyond this group) at educational institutions.

Computer networks are based on an architecture of nodes and a delivery system of packets. Nodes are computers which are always connected to the network through which digital messages flow. Information from a computer is split up into packets, each with the address of the receiver; each packet is sent through the system separately, and the original message is reassembled at the receiver’s computer. The system is designed so that each packet flows by the quickest route possible at the time it is sent; in this way, various parts of a message may flow through a variety of different nodes before reaching their destination. Legend has it that ARPANet was designed to withstand a nuclear attack: if some nodes were knocked off the system, messages could still get through by finding the fastest route through whatever nodes remained.
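The mechanism described in this paragraph can be illustrated with a minimal sketch. It is not a description of how ARPANet was actually implemented: the packet fields (`to`, `seq`, `total`, `data`), the four-node network in `LINKS` and the use of hop count as a stand-in for "quickest route" are all assumptions made for the sake of illustration.

```python
import random
from collections import deque


def split_into_packets(message, receiver, size=8):
    """Split a message into numbered packets, each carrying the receiver's address."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [{"to": receiver, "seq": n, "total": len(chunks), "data": chunk}
            for n, chunk in enumerate(chunks)]


def reassemble(packets):
    """Rebuild the original message from packets that may arrive in any order."""
    ordered = sorted(packets, key=lambda p: p["seq"])
    if not ordered or len(ordered) != ordered[0]["total"]:
        raise ValueError("some packets are missing")
    return "".join(p["data"] for p in ordered)


def find_route(links, start, goal, down=()):
    """Breadth-first search for a route with the fewest hops, skipping failed nodes."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in links.get(path[-1], ()):
            if nxt not in seen and nxt not in down:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no route survives


# Hypothetical four-node network: A reaches D through either B or C.
LINKS = {"A": ["B", "C"], "B": ["A", "D"], "C": ["A", "D"], "D": ["B", "C"]}

message = "In the beginning was the word."
packets = split_into_packets(message, receiver="D")
random.shuffle(packets)  # packets may take different routes and arrive out of order
restored = reassemble(packets)
```

In this sketch, `find_route(LINKS, "A", "D")` passes through node B, while `find_route(LINKS, "A", "D", down={"B"})` falls back to the route through C, which is the behaviour the legend about surviving the loss of nodes refers to.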

As the technology spread, other computer networks began developing. By the 1980s, a movement to connect all of these networks had developed, which led to what we now know as the network of networks, the Internet.

ARPANet had originally been designed to allow researchers to share their work by making papers and other documents available. Early designers were surprised to find that the technology was used primarily for personal communications, yet email was, and remains, one of the most popular uses of computer networks.

By the 1990s, huge amounts of information were becoming available on the Internet. This created a serious problem: how to find what one wanted in the mountainous volume of information available. Some tools, such as Archie and Gopher, had been created to search through indexes of material. However, a different approach to information was sought to alleviate this growing problem, an approach for which the theoretical groundwork had been laid in 1945 by a pioneer in computing research, Vannevar Bush.

In an article titled “As We May Think” which first appeared in The Atlantic Monthly, Bush described a machine, which he called a Memex, which could call up documents requested by a user. The user could then annotate the documents, store them for future use and, perhaps most importantly, create links from one document to another which made explicit the connections between them which had been made by the user. The Memex was an unwieldy mechanical device which was never produced, but the article suggested a new way of organizing information.

This concept was developed in the 1960s by a man named Theodore Nelson. He suggested a computer system where documents contained links to other documents. By clicking on the link, a window would be opened up which contained the text referred to in the first text. Of course, the second text would have links to tertiary texts, which would have their own links, and so on. Nelson named his system Xanadu, a reference to a Coleridge poem. His ideas circulated on the Internet throughout the 1960s and 1970s; by the time they were published in the 1980s as Literary Machines, Nelson had worked out an elaborate system, which he called hypertext, which included payment schemes for authors, public access and human pathseekers to help users find useful information.

The electronic Xanadu was never built; it was superseded by a similar, although slightly less ambitious system: the World Wide Web. In the early 1990s, Tim Berners-Lee and researchers at the Conseil Européen pour la Recherche Nucléaire (the European physics laboratory known as CERN) developed HyperText Mark-up Language (HTML). HTML allowed links from one document to another to be embedded into text; links were highlighted (often in blue) to indicate that they were active. Using a browser (the first widely used of which, Mosaic, was developed by Marc Andreessen at the American National Center for Supercomputing Applications), computer users could click on interesting links and be immediately transported (electronically) to the document. HTML has a variety of useful features: it is simple enough to be written in a word processing programme; in its original form, it took very little time to learn; it was designed to be read by whatever browser a user employed; and so on. Web browsers (today primarily Microsoft’s Internet Explorer and Netscape Navigator) also have a lot of useful features: users can store links; they can access the HTML code of any page (if they find a use for HTML which they would like to employ); etc.
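To make the idea of links embedded in text concrete, the following sketch shows a small HTML page and a few lines of Python which pull out the addresses its links point to. The page content, the file name `chapter2.html` and the address `http://example.org/ezine/` are invented for illustration; the `LinkCollector` class is a hypothetical helper built on Python's standard `html.parser` module, not part of any browser.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the destination of every <a href="..."> link in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # A link is an <a> tag whose href attribute names the target document.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# An invented page: plain text with two links embedded in it.
PAGE = """<html><body>
<p>Read the <a href="chapter2.html">next chapter</a> or
visit the <a href="http://example.org/ezine/">ezine</a>.</p>
</body></html>"""

collector = LinkCollector()
collector.feed(PAGE)
print(collector.links)  # ['chapter2.html', 'http://example.org/ezine/']
```

The words between the opening and closing `a` tags are what a reader sees highlighted; the `href` value is the document the browser fetches when the reader clicks.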

For more on Charles Babbage, see Moseley (1964) and Rowland (1997). For more on the development of computers, see Rheingold (1985 and 1993), Selkirk (1995), Rowland (1997) and Edwards (1997). For more on the history of hypertext, see Bush (1945) and Nelson (1992).

Traditional academic work based on the scientific method requires the researcher to start with a well-formulated question, gather evidence relevant to the problem and offer a solution to the question. The traditional method is useful when the problem to be solved has well defined parameters, which usually occurs in what Kuhn calls “normal science.” (1962)

Unfortunately, these are not the conditions under which research on computer mediated communications networks, and especially the World Wide Web, is conducted. Although various researchers are fruitfully borrowing ideas from their home disciplines, there is no paradigm (set of values, methods, research questions, etc.) for this kind of communications research. In truth, we don’t know what the proper questions are, let alone the most appropriate method(s) of answering them. The literature on the subject is growing in a variety of directions without, to this point, cohering in a single agreed upon way.

For this dissertation, I have borrowed a technique from social constructivism called “snowballing.” Start with any single stakeholder group in a developing technology. It will become apparent that other stakeholder groups either support or, more often, conflict with this group. Add them to your list of research subjects. Iterate until you have as complete a picture of all of the stakeholders as possible. Starting with individual fiction writers, I expanded the scope of the dissertation to include other stakeholders whose interests may affect those of the writers.
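The iterative character of snowballing can be sketched as a simple procedure. This is an illustration only, not the procedure actually followed in the research: the `snowball` function and the relationships listed in `RELATED` are hypothetical, loosely based on groups named in this chapter.

```python
from collections import deque


def snowball(seed, related_groups):
    """Start from one stakeholder group and keep adding the groups it leads to."""
    found = [seed]
    queue = deque([seed])
    while queue:
        group = queue.popleft()
        # Each group examined points to others which support or conflict with it.
        for other in related_groups.get(group, []):
            if other not in found:
                found.append(other)
                queue.append(other)
    return found


# Hypothetical relationships uncovered while studying each group.
RELATED = {
    "fiction writers": ["ezine editors", "readers"],
    "ezine editors": ["fiction writers", "page designers"],
    "readers": ["search engines", "Internet Service Providers"],
}

stakeholders = snowball("fiction writers", RELATED)
```

The procedure stops only when no new groups turn up. With a richer set of relationships it would keep growing, which is why the choice of a central object to bound the inquiry matters.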

While the strength of the traditional method is the way it shows the causal relationships between variables, the strength of the snowball method is that it helps uncover a web of relationships. This makes it ideal for research into the interactions between individuals and/or social groups. A weakness of this method is that, sooner or later, everything is related to everything else, making the whole of human experience a potential part of one’s research. While the traditional method carefully delineates its subject, this method does not. It becomes important, then, to ensure that all of the stakeholder groups which become part of a research project are, in fact, related to a central object. Typically with social constructivist studies, the technology under dispute becomes the one factor which binds all of the groups together in their web. For the present study, I narrowed the focus further: stakeholder groups were chosen because they were affected by or affected the interests of the individual writers who use the specific technology (the Web).

Further, rather than focusing on where research is taking place, the traditional site of social constructivist studies, I intend, instead, to focus on what Schwartz Cowan calls the “consumption junction,” the point where technology is actually used. “There are many good reasons for focusing on the consumption junction,” she writes. “This, after all, is the interface where technological diffusion occurs, and it is also the place where technologies begin to reorganize social structures.” (Schwartz Cowan, 1987, 263) Or, to put it another way, the consumption junction is the place at which the mutual shaping of technology and society is most apparent.

Focusing on the consumption junction seems, at first glance, to ignore all of what traditionally is considered necessary to understand technological development: processes of invention, innovation, development and production. Instead, it focuses on the importance of the diffusion of a technology into society. However, as Schwartz Cowan argues, “Most artifacts have different forms (as well as different meanings) at each stage in the process that ends with use, so that an analysis that ignores the diffusion stage does so only at its peril. In any event, a consumer-focused analysis that deals properly with the diffusion stage can also shed important light on invention, innovation, development, and production.” (ibid, 278/279)

This last point is invaluable. The mutual shaping model which I posited above can be read to show that consumers of a technology give feedback to its producers. This occurs not only in terms of whether they accept or reject it, but the circumstances under which it is used; the telephone, for instance, was considered by its creators as primarily a tool for business, until women took it up in large numbers in the home and turned it into an instrument for personal communication. Moreover, consumers can have a direct effect on the shape of a technology, as when consumer groups threaten to boycott a producer unless action is taken to change a specific product, or as when they simply refuse to use an innovation, as we shall see in Chapter Three’s exploration of “push” technology. “Thus different using practices may bear on the design of artifacts, even though they are elements of technological frames of non-engineers.” (Bijker, 1987, 172)

In a sense, it does not matter which part of the mutual shaping process one takes as a starting point. One must work both backwards and forwards in the loop to give the fullest possible picture of how a technology develops. Starting from a point of consumption, as I intend to, one can then work backwards to give a sense of how the technology developed before it was put on the market to be consumed, as well as moving forward to show how the consumption of the technology may affect the next iteration of the technology, as well as other existing technologies.

In this case, the important process is the one by which the work of writers is purchased — or otherwise consumed — by readers. Complex systems of publishing have evolved in order to achieve this transfer of knowledge. The most important stakeholders in publishing will be those groups which are directly involved in this process.

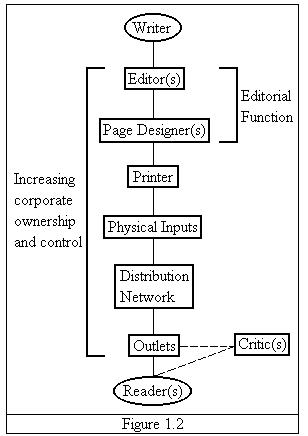

Determining the stakeholders in traditional publishing is relatively easy, given that the technology is well established (see Figure 1.2). It starts with an individual with a story to tell, a writer. The writer submits this story to a publisher (whether of magazines or books). The publisher function can be divided into at least two specialties: an editor or series of editors who work with the text of the story; and a designer or series of designers who take the text and develop its visual presentation on the printed page.

Once a book or magazine has been designed, it goes to a printer, whose job is to make copies of it. This requires physical inputs: paper and ink, obviously, but also staples and glue (or other binding materials) and even electricity (to run the machines). Once the book or magazine has been fixed in a physical form, it must then be distributed to readers. A distribution network must be established (which may include trucks for local distribution and trains or even planes for national or international distribution). Sometimes, as with mail order books, the distribution network is sufficient. However, most people buy books from retail outlets (which include card shops and general stores as well as stores specializing in books).

All of the functions described here, the various people who work on a manuscript after it leaves the hands of a writer and before it reaches a reader, are increasingly being concentrated in the hands of a smaller number of large corporations. In fact, as we shall see in Chapter Three, many are owned by the very largest of transnational entertainment conglomerates.

Figure 1.2: Stakeholders in Print Publishing

The reader is the final link in the publishing chain. Readers may know exactly what they are looking for when they enter a bookstore (for instance, the latest book by a favourite author). However, more often, they require help to know what might interest them out of the vast number of books which are available to them. For this reason, a critical establishment often intercedes between the book buyer and the outlet (represented by a broken line in Figure 1.2 to indicate that it isn’t always necessary).

Every person who performs one of these functions has a stake in the publishing industry as it is currently configured.

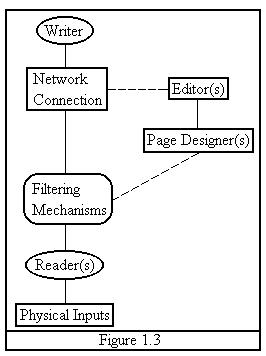

Contrast this with how stories are distributed over digital networks (Figure 1.3). We start, once again, with an author with a work. The author requires a computer and a physical connection to the World Wide Web. This physical connection might be over phone lines, cable modem or some form of wireless Internet connection, such as a satellite dish. It must also include an Internet Service Provider.

Figure 1.3: Stakeholders in Online Publishing

The writer can put her or his material directly onto a Web page. Many, however, are publishing their material in electronic magazines, or ezines. With a personal Web page, the writer is her or his own editor and page designer. With ezines, additional people will (probably) edit the story and design the Web page on which it will appear (represented by a broken line, again, to indicate that they are not always necessary).

Unless the reader is a close personal friend of the writer, she or he will require some sort of filtering device (most often a search engine, although other devices are currently being developed – see Chapter Five) to find material she or he will enjoy.

The computer has been hailed in the past as the means by which we would achieve a paperless world. So far, this has not happened. Most people find it difficult to read from computer screens for any length of time. When confronted with long passages of text, most people print them so that they can be read from sheets of paper. For this reason, the physical inputs shift from being the responsibility of the printer to that of the reader.

This quick look at the stakeholders in traditional and online publishing is provisional: we must, in our investigations, be open to the possibility that other stakeholders will be uncovered, or that what appeared at first blush to be a relevant social group was either not a definable social group or not relevant. Nonetheless, this gives an initial shape to our inquiries.

Chapter Two will tell the story of writers who choose to place their fiction on the World Wide Web. It is the story of an eighteen-year-old who “started off writing self indulgent teenage poetry and funny essays to make my teachers laugh” and “ran away with the circus to go to film school, where i studied a lot of screenwriting.” (Poulsen, 1998, unpaginated) It is also the story of “a retired chemistry professor, whose writing was confined to technical journals until two years ago, when I began writing fiction as a kind of hobby.” (Steiner, 1998, unpaginated) In addition, it is the story of a 61-year-old man who has published “two novels in the U.S. one with Grove Press and the other with Dalkey Archive Press…, poetry in various journals including Beloit Poetry Journal [and] articles in The Review of Contemporary Fiction.” (Tindall, 1998, unpaginated) What unites these, and the other people surveyed for this dissertation, is the desire to write fiction and have it read.

However, as we shall see, these writers belong to different sub-groups whose aims and interests are not always the same. These groups are: writers who put their fiction on their own Web sites; writers who have their fiction published in electronic magazines; writers of hypertext fiction; and writers of collaborative works of fiction. Along the way, we shall identify, and explore, an important related stakeholder group: editor/publishers of online magazines.

Many of the writers and editors surveyed for Chapter Two claim varying degrees of interest (ranging from curiosity to concern) in the possibility of generating income from the publication of their writing on the Web. In response to this concern, the economics of information will be the broad subject of Chapter Three. In that chapter, I will delve into the issue of what information is worth, and consider the application of various economic models to information delivered over the Internet. In order to properly explore these issues, another stakeholder group will have to be considered: the transnational entertainment conglomerates which have an interest in both exploiting the Web for their own profit and ensuring that it doesn’t seriously interfere with the revenues which they can generate from their stake in existing media. In that chapter, I also intend to show the efforts the corporations have made to shape the new medium to their advantage; in so doing, I hope to make it clear that the interests of these corporations are often in conflict with those of individual writers.

Another concern of many of the writers surveyed in Chapter Two was the effect government intervention could have – in both positive and negative ways – on their ability to publish online. Chapter Four will look at the role governments have in Web publishing. This includes extending traditional financial support for the arts to the new medium of communication, and enforcing legal frameworks such as copyright (an issue which, as we shall soon see, is of concern to many of the individual authors). The chapter will also look at a couple of barriers to effective government legislation: the international nature of the medium, which, because it crosses national borders, plays havoc with traditional notions of jurisdiction; and the chameleon-like nature of digital media, which does not fit comfortably into traditional models of communication, and, therefore, does not fit comfortably into traditional models of communication regulation.

As we saw in Figure 1.2, many different groups of people are involved in the production of print books and magazines. To the extent that online publishing threatens to change the nature of publishing, those who work in print publishing industries should be considered stakeholders in the new medium. Chapter Five will look at some of these other stakeholders who will be affected by (and may affect) the new technology. These include publishing companies, designers, bookstores, and critical online filtering mechanisms.

By the end of Chapter Five, we will hopefully arrive at an understanding of the complexity of the various interests involved in this new technology. Chapter Six, the conclusion, will attempt to synthesize these interests so that we may better understand how the conflicting interests of stakeholder groups affect the development and use of a new technology. In addition, I will revisit the theoretical question of the relationship between technology and society. The dissertation will conclude with a cautionary story about the history of another technology which seemed to allow individuals to communicate with each other: radio.